What is HDR? High Dynamic Range TVs and phones explained

High Dynamic Range (HDR) has arguably had the biggest impact on displays over the past ten years, but what does it mean for your viewing experience on TVs and smartphones?

HDR originated in the world of photography, where it describes merging several photos taken at different exposures into one ‘high dynamic range’ photo.

Since its emergence, it’s become a fixture on TVs and smartphones, though the way it works on displays is different from photography.

What exactly is HDR, what does it do and how does it benefit what you watch?

What is HDR?

HDR stands for High Dynamic Range and refers to the contrast (or difference) between the brightest and darkest parts of an image.

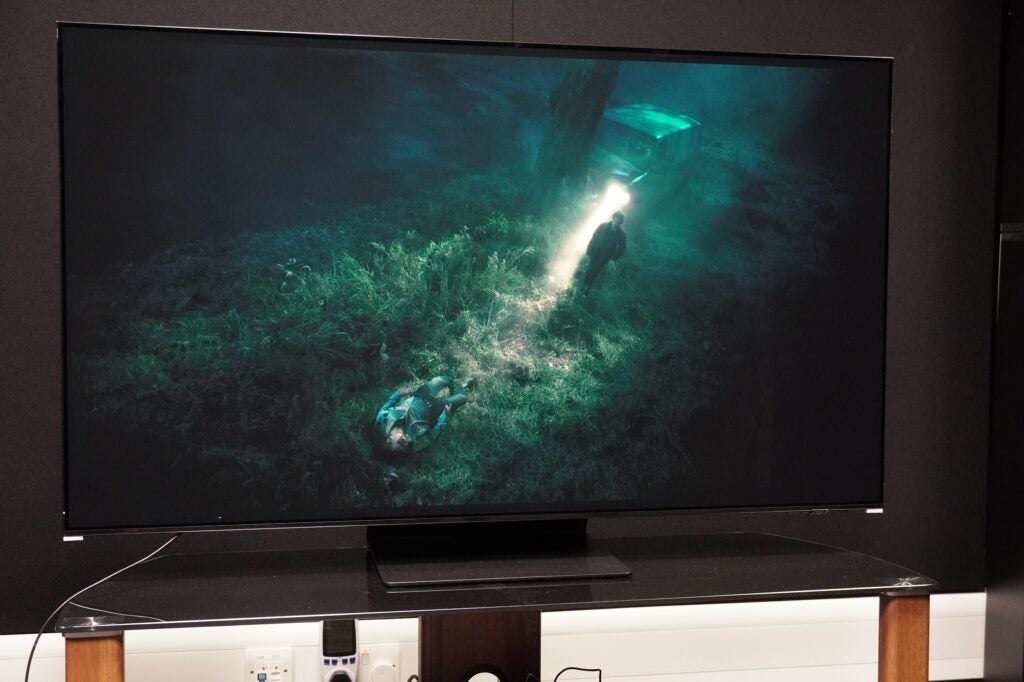

The idea is that your eyes can perceive whites that are brighter and blacks that are darker than traditional SDR displays have been able to show. HDR content preserves details in the darkest and brightest areas of a picture, details often lost using older imaging standards such as Rec.709.

HDR10 is the baseline industry-standard format, and virtually all HDR TVs and HDR content support it. HDR10 sets brightness levels once for a programme’s entire running time, so the levels of brightness and darkness stay the same throughout.

Dolby Vision and HDR10+ are more advanced formats, described as ‘dynamic’ forms of HDR. They use metadata to optimise the image scene-by-scene or shot-by-shot for deeper blacks or brighter whites.

What makes a screen a HDR TV?

The contrast ratio is one of the most important factors to consider for HDR.

The contrast ratio compares the brightest and darkest parts of an image: the greater the gap between light and dark, the greater the contrast. A poor contrast ratio results in blacks that look grey and whites that look dull.

Contrast is measured using brightness (also known as luminance), which refers to how bright a TV can go and is measured in ‘nits’. One nit is equivalent to one candela (one candlepower) per square metre (1cd/m²). Around 500 – 600 nits of peak brightness is considered enough for a TV to convey the effects of HDR.

The flip side of the coin is black level, which refers to how dark an image can go and is also measured in nits. For example, a TV could have a peak brightness of 400 nits and a black level of 0.4 nits. Deep black levels and high brightness combine for a bigger contrast ratio and a better picture.
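To put numbers on that example, the contrast ratio is simply peak brightness divided by black level. The short Python sketch below (the function name `contrast_ratio` is our own, for illustration) works it out for the 400-nit, 0.4-nit TV described above.

```python
def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Contrast ratio = peak brightness / black level, both in nits."""
    if black_nits <= 0:
        # A black level of exactly 0 nits would make the ratio infinite,
        # so this sketch rejects it rather than dividing by zero.
        raise ValueError("black level must be greater than zero")
    return peak_nits / black_nits


# The example TV from the text: 400 nits peak brightness, 0.4 nits black level.
ratio = contrast_ratio(400, 0.4)
print(f"{ratio:,.0f}:1")  # 1,000:1
```

Halving the black level to 0.2 nits at the same brightness doubles the ratio to 2,000:1, which is why deep blacks matter as much as peak brightness.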

To convey HDR content, a TV must be able to process what’s known as 10-bit or ‘deep’ colour, a signal with over a billion possible colours. Compare that to SDR (Standard Dynamic Range), which uses 8-bit colour (around 16.7 million possible colours).
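The ‘billion’ and ‘16 million’ figures come from multiplying out the shades available on each of the three colour channels (red, green and blue). A quick Python check, with a helper name of our own choosing:

```python
def colour_count(bits_per_channel: int) -> int:
    """Number of distinct colours when red, green and blue each get this many bits."""
    levels = 2 ** bits_per_channel  # shades available per channel
    return levels ** 3              # every combination of R, G and B


for bits in (8, 10):
    print(f"{bits}-bit: {2 ** bits} shades per channel, {colour_count(bits):,} colours")

# 8-bit: 256 shades per channel, 16,777,216 colours
# 10-bit: 1024 shades per channel, 1,073,741,824 colours
```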

HDR TVs with 10-bit colour can produce a wider range of colours, reducing obvious banding (visible steps) between shades. Subtler shading helps make a scene look more realistic.

However, just to confuse matters, a TV doesn’t need to display all the colours in a 10-bit signal. It just has to be able to process the signal and produce an image based on that information. For example, some TVs, including models from Hisense, use 8-bit panels with FRC (frame rate control) to simulate the extra colours.
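FRC works by flickering a pixel between two neighbouring 8-bit shades quickly enough that your eye blends them into an in-between shade. The Python sketch below is a simplified, hypothetical model of that idea (real panels use their own patterns, often mixing spatial and temporal dithering, and `frc_frames` is our own name): each 8-bit step spans four 10-bit steps, so a 10-bit level is approximated over a four-frame cycle.

```python
def frc_frames(value_10bit: int) -> list[int]:
    """Approximate a 10-bit level on an 8-bit panel over a 4-frame cycle.

    Hypothetical, simplified model of FRC (temporal dithering).
    """
    if not 0 <= value_10bit <= 1023:
        raise ValueError("10-bit values range from 0 to 1023")
    # Each 8-bit step covers four 10-bit steps.
    low, remainder = divmod(value_10bit, 4)
    high = min(low + 1, 255)  # an 8-bit panel can't go above 255
    # Show the higher shade on `remainder` of the four frames, the lower one otherwise,
    # so the average over the cycle lands between the two 8-bit shades.
    return [high if frame < remainder else low for frame in range(4)]


frames = frc_frames(513)
print(frames)                     # [129, 128, 128, 128]
print(sum(frames) / len(frames))  # 128.25, i.e. 513 / 4
```

A 10-bit level that is an exact multiple of four (such as 512) needs no flicker at all; the panel simply shows the matching 8-bit shade on every frame.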

What is DCI-P3 colour?

A HDR TV must reproduce a certain proportion of the DCI-P3 (or ‘P3’) colour space. DCI-P3 is most widely known for its use in digital film projection, and it is larger than the colour space SDR TVs use (Rec.709), which means it can represent more colours.
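One rough way to see that DCI-P3 is larger than Rec.709 is to plot each standard’s red, green and blue primaries on the CIE 1931 xy chromaticity diagram and compare the areas of the triangles they form. The Python sketch below does that with the shoelace formula, using the published primaries for each standard. Bear in mind that area on this diagram doesn’t track perceived colour differences evenly, and the coverage figures quoted in reviews measure how much of P3 a TV overlaps rather than raw area, so treat the result as a size comparison only.

```python
# CIE 1931 xy chromaticity coordinates of each standard's red, green and blue primaries.
PRIMARIES = {
    "Rec.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Shoelace formula for the area of the triangle formed by three primaries."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


base = triangle_area(PRIMARIES["Rec.709"])
for name, points in PRIMARIES.items():
    area = triangle_area(points)
    print(f"{name}: area {area:.4f} ({area / base:.0%} of Rec.709)")
```

On these numbers the P3 triangle comes out roughly a third larger than Rec.709’s, with Rec.2020 larger still.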

Coverage refers to how much of that colour space a display can reproduce, and to display HDR images well, a TV should cover as much of the P3 colour space as possible. The UHD Premium certification, for instance, requires more than 90% coverage of the P3 colour space.

A HDR TV that reaches this level of DCI-P3 coverage will be able to show more colours and present HDR content more effectively. Manufacturers don’t always reveal this figure, but you can find DCI-P3 coverage results in reviews online.

How do I know if a TV is HDR compatible?

Most TVs sold today are HDR-enabled. If you’re unsure, check the specifications online to see which HDR formats a TV supports, or read our reviews.

Cheaper TVs have limited brightness. TVs such as the Toshiba UK4D can only hit a peak brightness of around 350 nits, so highlights (the brightest parts of the image) aren’t as bright, and black levels aren’t as deep either.

The further up the range you go, the brighter a TV can get. Samsung’s Mini LED TVs deliver some of the brightest images on the market, hitting over 2,000 nits. If you want to see the benefits of HDR in action, we’d suggest a TV that puts out at least 500 – 600 nits.

What is HDR10?

Announced in 2015, HDR10 uses the wide colour gamut Rec.2020 colour space and a bit depth of 10 bits.

It’s an open format that anyone can use, and displays need at least a HDMI 2.0a connection to carry the signal from an external source (like a streaming stick or 4K Blu-ray player).

HDR10 content is typically mastered at 1,000 nits, and unlike HDR10+ and Dolby Vision, its metadata is static, so the levels of brightness and darkness stay the same across the running time of a film or programme.

Even so, HDR10 allows for greater contrast than SDR, and with WCG (Wide Colour Gamut) it can display more colours, though not all TVs can show a wide colour gamut, especially budget sets.
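HDR10’s ‘static’ metadata means the whole programme is described by a single set of values. Two of those values are MaxCLL (Maximum Content Light Level, the brightest pixel anywhere in the programme) and MaxFALL (Maximum Frame-Average Light Level, the brightest frame on average). The Python sketch below computes both for a toy ‘programme’ of two frames. It simplifies each pixel to one luminance value in nits, whereas the real measurement uses the brightest of each pixel’s red, green and blue components; the function name is our own.

```python
from typing import Dict, Sequence

# Simplification: one luminance value (in nits) per pixel.
Frame = Sequence[float]


def static_metadata(frames: Sequence[Frame]) -> Dict[str, float]:
    """Compute a single MaxCLL/MaxFALL pair for an entire programme."""
    max_cll = max(max(frame) for frame in frames)                # brightest pixel anywhere
    max_fall = max(sum(frame) / len(frame) for frame in frames)  # brightest frame on average
    return {"MaxCLL": max_cll, "MaxFALL": max_fall}


programme = [
    [0.5, 2.0, 5.0, 10.0],         # a dark night-time frame
    [200.0, 400.0, 900.0, 600.0],  # a bright daylight frame
]
print(static_metadata(programme))  # {'MaxCLL': 900.0, 'MaxFALL': 525.0}
```

Because those two numbers describe the whole film, a TV working from them has the same 900-nit reference point for the dark frame as for the bright one.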

What is HDR10 Plus?

HDR10+ is an open standard adopted by several TV manufacturers (such as Samsung, Philips, and Panasonic) and video streaming services such as Amazon Prime Video. It differs from HDR10 in that it uses dynamic rather than static metadata.

That means it can adjust brightness and black levels for individual scenes or frames. For example, if a scene is meant to look dimly lit, HDR10+ can tell the TV to reduce the level of brightness for that specific scene.
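To see why per-scene metadata helps, imagine a hypothetical 500-nit TV showing two scenes from a film whose brightest moment hits 900 nits. The Python sketch below uses deliberately simple linear scaling (real TVs use smoother curves, and this is not the maths HDR10+ specifies): with static metadata, every scene is scaled against the film-wide 900-nit peak, so the dark scene is dimmed even though it would fit comfortably; with dynamic metadata, the dark scene’s own 10-nit peak tells the TV to leave it alone.

```python
def tone_map(pixels, content_peak, display_peak):
    """Scale pixels down only when the content is brighter than the display can go.

    Deliberately simple linear scaling for illustration; real TVs use smoother curves.
    """
    if content_peak <= display_peak:
        return list(pixels)  # already fits the panel: leave untouched
    scale = display_peak / content_peak
    return [p * scale for p in pixels]


DISPLAY_PEAK = 500.0  # hypothetical TV peak brightness in nits
dark_scene = [0.5, 2.0, 5.0, 10.0]
bright_scene = [200.0, 400.0, 900.0, 600.0]
programme_peak = max(max(dark_scene), max(bright_scene))  # 900 nits for the whole film

# Static metadata: every scene is mapped against the programme-wide peak.
static_dark = tone_map(dark_scene, programme_peak, DISPLAY_PEAK)
# Dynamic metadata: each scene is mapped against its own peak.
dynamic_dark = tone_map(dark_scene, max(dark_scene), DISPLAY_PEAK)

print(static_dark)   # dark scene dimmed by 500/900 even though it already fits
print(dynamic_dark)  # [0.5, 2.0, 5.0, 10.0]
```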

Though HDR10+ achieves the same objective as Dolby Vision, it gives different displays more freedom to use their own strengths and processing, while Dolby Vision is stricter in its instructions.

There is not as much content available in HDR10+ as there is in Dolby Vision, though Prime Video and Rakuten TV streaming services support it, and many 4K Blu-rays carry it too.

What is Dolby Vision?

Dolby Vision is similar to HDR10+ in that it adds a layer of dynamic metadata to the HDR signal. This dynamic metadata carries scene-by-scene or frame-by-frame instructions on how a TV should present the HDR picture, improving brightness, contrast, detailing and colour reproduction.

It’s used by Hollywood filmmakers to grade films, as well as in cinemas. It’s more widespread than HDR10+, with more TV brands and streaming services backing it. The only significant holdout remains Samsung, which prefers HDR10+.

Dolby Vision can bring benefits to cheaper sets, as it can offer improved tone-mapping. Tone-mapping is the way a TV adapts an image to match the display’s capabilities, such as its peak brightness.
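Each TV maker and format handles tone-mapping its own way, so the Python sketch below is a generic, hypothetical example, not Dolby Vision’s method. It maps content mastered at 1,000 nits onto a 400-nit panel: everything below a ‘knee’ point (75% of the panel’s peak by default, an arbitrary choice here) passes through unchanged, preserving shadows and mid-tones, while highlights above it are squeezed into the remaining headroom instead of being clipped. Both function names are our own.

```python
def knee_tone_map(nits: float, content_peak: float, display_peak: float,
                  knee_ratio: float = 0.75) -> float:
    """Map a luminance value (nits) from the content's range into the display's range.

    Hypothetical piecewise-linear 'knee' curve: values up to the knee pass through
    untouched; values from the knee up to content_peak are compressed linearly into
    the space between the knee and display_peak. knee_ratio should be between 0 and 1.
    """
    if content_peak <= display_peak:
        return min(nits, display_peak)       # content already fits the panel
    knee = knee_ratio * display_peak
    if nits <= knee:
        return nits                          # shadows and mid-tones unchanged
    t = (min(nits, content_peak) - knee) / (content_peak - knee)
    return knee + t * (display_peak - knee)  # compressed highlights


def hard_clip(nits: float, display_peak: float) -> float:
    """For comparison: simply cut off anything brighter than the panel."""
    return min(nits, display_peak)


for level in (100, 650, 1000):
    print(level, knee_tone_map(level, 1000, 400), hard_clip(level, 400))

# 100 100 100
# 650 350.0 400
# 1000 400.0 400
```

With hard clipping, 650-nit and 1,000-nit highlights both end up at 400 nits and any detail between them is lost; the knee curve keeps them distinct (350 versus 400 nits) at the cost of slightly flattening the brightest parts of the picture.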

Dolby has also brought out Dolby Vision IQ, which optimises HDR content to match the lighting conditions in a room. The result is that viewers can pick out detail in the darker and brighter parts of an image that would otherwise be washed out by ambient light.

What is HLG?

HLG stands for Hybrid Log Gamma and is used by broadcast services. Rather than relying on metadata, it builds the extra dynamic range into the signal’s transfer curve itself, so content can achieve greater contrast, brightness, and truer colours.

HLG was developed by the BBC and NHK (the Japanese national broadcaster) to display a wide dynamic range while also remaining compatible with SDR transmission standards.

What that means is viewers without a HDR TV can receive the same broadcast feed rather than a separate one, and SDR displays can still show it as a standard picture.

This makes HLG a backwards-compatible and cost-effective HDR format, as content creators don’t have to produce two feeds for different TVs. BBC iPlayer uses it, as does Sky for sports such as football and F1.
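The ‘hybrid’ in the name refers to the transfer curve HLG uses to turn scene light into a signal, defined in the ITU-R BT.2100 standard. The Python sketch below implements that curve. The darker part of the range follows a square-root curve, similar to the conventional gamma curve an SDR TV expects, which is what lets an SDR set show the feed acceptably; the brighter part switches to a logarithmic curve that packs in the extra highlight range. The function name is our own; the constants are those published in BT.2100.

```python
import math

# Constants for the HLG opto-electronic transfer function (OETF) in ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                  # 0.28466892
C = 0.5 - A * math.log(4 * A)  # 0.55991073


def hlg_oetf(e: float) -> float:
    """Convert normalised scene light (0 to 1) into an HLG signal value (0 to 1)."""
    if not 0.0 <= e <= 1.0:
        raise ValueError("scene light must be normalised to the range 0 to 1")
    if e <= 1 / 12:
        # Lower half of the signal range: square-root curve, close to SDR gamma.
        return math.sqrt(3 * e)
    # Upper half: logarithmic curve that compresses bright highlights.
    return A * math.log(12 * e - B) + C


for e in (0.0, 1 / 12, 0.5, 1.0):
    print(f"scene light {e:.3f} -> signal {hlg_oetf(e):.3f}")
```

The two halves meet at a signal value of 0.5, so the bottom half of the signal behaves much like a conventional picture while the top half carries the HDR highlights.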

What about HDR on smartphones?

Phones and tablets now have brighter displays, and with people watching more content on their mobile devices, there’s been a push to support HDR in the apps of streaming services such as Netflix, Disney+ and Prime Video.

HDR on phones can’t have the same impact as a good TV display, but it can make a difference with punchier colours and better contrast.

The number of HDR-compatible smartphones keeps growing, and Samsung, Sony, Motorola, OnePlus and Apple, to name a few, all make phones that support various HDR formats. Many smartphone brands support HDR10+, while Apple’s iPhones feature Dolby Vision.

Should you buy an HDR TV?

HDR performance varies, as cheaper TVs often aren’t bright enough to do HDR justice. The further up the ladder you go, the better the HDR experience will be. As most TVs sold support HDR in some way, the question is not whether you should buy a HDR TV, but which one.

These days HDR performance arguably matters more than resolution. Before buying a HDR TV, research the model to make sure you are getting the HDR experience you want.

Our reviews regularly cover each TV’s brightness, its HDR performance and which HDR formats it supports, so you’re not left in the dark about the kind of viewing experience you’ll get. A good HDR TV can make viewing much more immersive, and if that’s what you want, there are plenty available, though you will have to cough up the money to get one.